Our Products and Services

Plug and Play Sensor Solution

Our sensor solution provides a basis for developing ADAS and autonomous driving algorithms. The base version targets ease of use: mount it on the car, connect to one of the Ethernet ports, and start recording data. All components sit on a single roof-mounted unit and are pre-calibrated and synchronized.

We can use our sensor solution design to implement individual solutions that fit our customers' specific use cases. The IEEE 1588-2008 Precision Time Protocol (PTP) can also be used to synchronize our roof-mounted sensors with the target vehicle's series-production sensors or with other external reference sensors.
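To illustrate what PTP synchronization computes, the following minimal sketch derives the clock offset and the path delay from the four timestamps of one delay request-response exchange, as defined in IEEE 1588-2008. The class and function names are hypothetical and chosen only for this example. The sketch also assumes a symmetric network path and ignores correction fields and filtering, which a real PTP stack would handle.

```python
from dataclasses import dataclass


@dataclass
class PtpExchange:
    """Timestamps (in seconds) of one PTP delay request-response exchange."""
    t1: float  # master sends Sync (master clock)
    t2: float  # slave receives Sync (slave clock)
    t3: float  # slave sends Delay_Req (slave clock)
    t4: float  # master receives Delay_Req (master clock)


def mean_path_delay(ex: PtpExchange) -> float:
    """One-way network delay, assuming the path is symmetric."""
    return ((ex.t2 - ex.t1) + (ex.t4 - ex.t3)) / 2.0


def offset_from_master(ex: PtpExchange) -> float:
    """How far the slave clock is ahead of the master clock."""
    return ((ex.t2 - ex.t1) - (ex.t4 - ex.t3)) / 2.0


def to_master_time(slave_timestamp: float, ex: PtpExchange) -> float:
    """Convert a timestamp taken on the slave clock to the master time base."""
    return slave_timestamp - offset_from_master(ex)


# Example: the slave clock runs 0.5 s ahead and the one-way delay is 1 ms.
exchange = PtpExchange(t1=100.000, t2=100.501, t3=100.600, t4=100.101)
print(offset_from_master(exchange), mean_path_delay(exchange))
```

Once the offset is known, measurements from an external sensor can be stamped in the same time base as the roof-mounted unit.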

Software Bundle

By purchasing one of our stock or custom sensor solutions, you will get access to our software bundle, which includes several useful tools.

Data Viewer

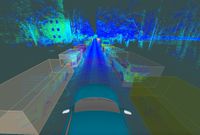

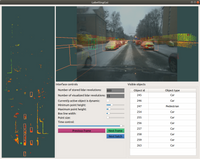

The viewer makes it easy to inspect captured data from recorded files and can visualize it together with detected objects from post-processing, real-time algorithms, or manual labels. With real-time rendering and instant time-axis scrolling, even large recordings of hundreds of gigabytes can be viewed easily on any of the common desktop operating systems. Because the viewer is free for everyone, recorded and processed data can be shared with and viewed by anyone.

Automatic Post Processing

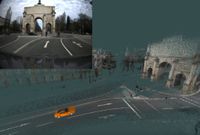

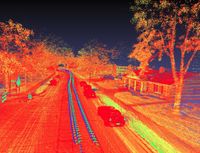

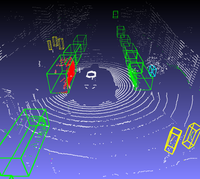

Simultaneous Localization and Mapping

Simultaneous localization and mapping uses the lidar's range and IMU data, together with an optional simultaneously recorded GNSS signal, to compute the localization of the ego vehicle throughout the recorded sequence. The localization, together with a dynamic object filter, produces a static map of the entire recording.
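The way a static map can emerge from localization plus a dynamic object filter can be sketched as follows. This is an illustrative simplification, not our production pipeline: the function name is hypothetical, the ego pose is reduced to a position and a yaw angle, and the per-point dynamic flags are assumed to come from some upstream filter.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Point = Tuple[float, float, float]
# Assumed pose format for this sketch: x, y, z and yaw of the ego vehicle in the map frame.
Pose = Tuple[float, float, float, float]
Frame = Tuple[Pose, Sequence[Point], Sequence[bool]]


def build_static_map(frames: Iterable[Frame]) -> List[Point]:
    """Accumulate all non-dynamic lidar points of a recording in the map frame."""
    static_map: List[Point] = []
    for (px, py, pz, yaw), points, is_dynamic in frames:
        c, s = math.cos(yaw), math.sin(yaw)
        for (x, y, z), dynamic in zip(points, is_dynamic):
            if dynamic:
                continue  # points on moving objects are left out of the map
            # Rotate by the ego yaw, then translate by the ego position.
            static_map.append((px + c * x - s * y, py + s * x + c * y, pz + z))
    return static_map


frames = [
    ((0.0, 0.0, 0.0, 0.0), [(1.0, 0.0, 0.0), (2.0, 0.0, 0.0)], [False, True]),
    ((1.0, 0.0, 0.0, math.pi / 2), [(1.0, 0.0, 0.0)], [False]),
]
print(build_static_map(frames))
```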

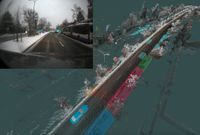

Deep Learning

The software bundle offers the option to automatically compute semantic segmentations and object instance segmentations using pre-trained versions of the Pyramid Scene Parsing Network and Mask R-CNN deep neural networks, and to fuse these with the point cloud. The data viewer allows for inspection of these results as well.
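One common way to fuse an image segmentation with lidar data is to project each point into the camera image and read the label at that pixel. The sketch below shows this idea under explicit assumptions: the points are already expressed in the camera frame (x right, y down, z forward), the camera follows an undistorted pinhole model, and the function name and parameters are hypothetical rather than part of our software bundle.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def fuse_labels(
    points_cam: Sequence[Point],
    label_image: Sequence[Sequence[int]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Optional[int]]:
    """Return one segmentation label per point, or None if the point is not visible."""
    height = len(label_image)
    width = len(label_image[0]) if height else 0
    labels: List[Optional[int]] = []
    for x, y, z in points_cam:
        if z <= 0.0:
            labels.append(None)  # behind the camera
            continue
        # Pinhole projection to pixel coordinates.
        u = math.floor(fx * x / z + cx)
        v = math.floor(fy * y / z + cy)
        if 0 <= u < width and 0 <= v < height:
            labels.append(label_image[v][u])
        else:
            labels.append(None)  # outside the image
    return labels


# A 4x4 label image: class 1 on the left half, class 2 on the right half.
image = [[1, 1, 2, 2] for _ in range(4)]
points = [(0.0, 0.0, 1.0), (-0.5, 0.0, 1.0), (0.0, 0.0, -1.0), (5.0, 0.0, 1.0)]
print(fuse_labels(points, image, fx=2.0, fy=2.0, cx=2.0, cy=2.0))
```

A fuller version would also handle occlusion, since a point hidden behind a closer object can otherwise inherit that object's label.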

Sensor Calibration

This module can recalibrate our roof-mounted sensor solution. It can also be used to calibrate lidar, camera, and inertial sensors from external suppliers against the roof-mounted sensors.

Data Exporter

All recorded and post-processed data is exported as open source ROS messages and bundled in ROS bag files. Only default message types from the original ROS codebase are used. This way, anyone can use the recorded and processed data without any dependency on our software stack.

3D Labeling Tool and Labeling Services

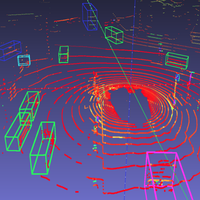

We have developed a 3D labeling tool that is implemented within the same software stack but marketed as a separate product and service. It helps enrich recorded data with rectangular cuboids that are annotated semi-manually or automatically. By using basic concepts similar to those of object tracking algorithms, our annotation tool enables us to label large quantities of consecutively recorded data faster than the competition.

In the first pass, all static objects are annotated with the help of the static map, which is considerably faster than traditional annotation efforts.

In the second pass, the dynamic objects are labeled. Since the motion of the common object classes of interest is physically plausible and well constrained, motion models are used to aid the annotation process. Given two annotations of an object at different points in time, its relative position and orientation at another point in time can be calculated from the interpolation of the object's trajectory and the motion of the ego vehicle. Hence, for intervals in which the target objects move consistently, the annotator can skip through the majority of the frames and expedite the annotation process.
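The interpolation between two annotations can be sketched as follows. The example is a simplified illustration and not the code of our labeling tool: it works in 2D with a yaw angle, assumes constant velocity between the two keyframes (plain linear interpolation instead of a richer motion model), and all names are hypothetical. Each annotation is moved from the ego frame into the map frame, interpolated there, and then moved back into the ego frame of the requested time.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    yaw: float  # radians


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def ego_to_world(obj: Pose2D, ego: Pose2D) -> Pose2D:
    c, s = math.cos(ego.yaw), math.sin(ego.yaw)
    return Pose2D(ego.x + c * obj.x - s * obj.y,
                  ego.y + s * obj.x + c * obj.y,
                  wrap_angle(obj.yaw + ego.yaw))


def world_to_ego(obj: Pose2D, ego: Pose2D) -> Pose2D:
    dx, dy = obj.x - ego.x, obj.y - ego.y
    c, s = math.cos(ego.yaw), math.sin(ego.yaw)
    return Pose2D(c * dx + s * dy, -s * dx + c * dy, wrap_angle(obj.yaw - ego.yaw))


def propagate_annotation(t: float,
                         t0: float, ann0: Pose2D, ego0: Pose2D,
                         t1: float, ann1: Pose2D, ego1: Pose2D,
                         ego_t: Pose2D) -> Pose2D:
    """Estimate the ego-relative object pose at time t from two keyframe annotations."""
    w0, w1 = ego_to_world(ann0, ego0), ego_to_world(ann1, ego1)
    s = (t - t0) / (t1 - t0)
    world = Pose2D(w0.x + s * (w1.x - w0.x),
                   w0.y + s * (w1.y - w0.y),
                   wrap_angle(w0.yaw + s * wrap_angle(w1.yaw - w0.yaw)))
    return world_to_ego(world, ego_t)


# A parked car seen from an ego vehicle that drives 4 m forward in 2 s.
result = propagate_annotation(
    1.0,
    0.0, Pose2D(10.0, 1.0, 0.0), Pose2D(0.0, 0.0, 0.0),
    2.0, Pose2D(6.0, 1.0, 0.0), Pose2D(4.0, 0.0, 0.0),
    Pose2D(2.0, 0.0, 0.0),
)
print(result)
```

In this usage example the object is static in the map, so the annotation in the intermediate frame follows from the ego motion alone.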

Software Development Services

aCVC, a Computer Vision Company UG, is first and foremost a software development company. As confident software engineers, we are certain we can create excellent software products for a wide variety of use cases and requirements, and we therefore offer our development services to clients. Although our strategy is to target specific tenders, feel free to contact us if you are looking for a software development partner, and we can discuss what we can do for you.

Portfolio of Relevant References in Computer Vision

Deep Learning

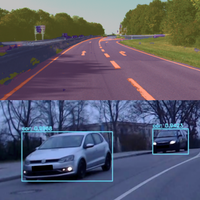

2D Object Detection

YOLOnet is one of the most popular open source projects in this domain. We have experience integrating YOLOnet versions from v3 onwards, including the "tiny" releases, into projects.

3D Object Detection

We have experience in using, as well as training, VoxelNet and PointPillars for various classes and for various lidar scanners. These are two of the most commonly used open source projects intended for 3D object detection in lidar data. PointPillars is the more recent of the two, and in our experience it achieves very similar detection quality to VoxelNet at much shorter execution times.

Semantic Segmentation

We have experience in both training and deploying PSPNet and SegNet for semantic image segmentation in various setups that include both camera and lidar sensors. In the lidar case, the point cloud was structured as an image, which made the data compatible with these types of networks.

Object Instance Segmentation

We have experience in both training and deploying Mask R-CNN, as well as knowledge of some of the common labeling tools used for creating compatible training data. Mask R-CNN can be used for both object detection and object instance segmentation tasks. Object instance segmentations, however, are especially useful when dealing with sensor setups that also include lidar data.
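Structuring a point cloud as an image is often done with a spherical projection, in which each pixel stores the range measured in one direction. The following is a minimal sketch of that idea and not necessarily the exact layout we used. The function name, the angular limits, and the image size are assumptions made for the example.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # x forward, y left, z up (sensor frame)


def to_range_image(points: Sequence[Point], width: int, height: int,
                   az_min: float, az_max: float,
                   el_min: float, el_max: float) -> List[List[float]]:
    """Project points to a height x width image of ranges (0.0 marks an empty pixel)."""
    image = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue
        az = math.atan2(y, x)   # azimuth, positive to the left
        el = math.asin(z / r)   # elevation, positive upwards
        if not (az_min <= az <= az_max and el_min <= el <= el_max):
            continue            # outside the chosen field of view
        # Column 0 is the leftmost direction, row 0 the highest elevation.
        col = min(int((az_max - az) / (az_max - az_min) * width), width - 1)
        row = min(int((el_max - el) / (el_max - el_min) * height), height - 1)
        # Keep the nearest return if several points fall into one pixel.
        if image[row][col] == 0.0 or r < image[row][col]:
            image[row][col] = r
    return image


cloud = [(10.0, -1.0, -0.5), (5.0, -0.5, -0.25), (-10.0, 0.0, 0.0)]
img = to_range_image(cloud, width=4, height=2,
                     az_min=-math.pi / 4, az_max=math.pi / 4,
                     el_min=-0.2, el_max=0.2)
print(img)
```

Further channels such as intensity or height can be stored per pixel in the same way, so that the result resembles a multi-channel camera image.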

Sensor and Algorithm Fusion

Fusing various sensors and algorithms has many advantages, as their receptive fields differ and can be complementary to one another. A project can benefit greatly from combining classically implemented algorithms (i.e. not based on deep learning) with deep learning techniques such as those described above.

Simultaneous Localization and Mapping

Lidar SLAM

We actively use Google Cartographer on our own sensor setup with the Livox Horizon scanner and have tuned its hyperparameters to obtain robust and accurate localization. We have also implemented a version that runs in real time and provides live localization data to other ROS nodes, as well as Google Cartographer configurations that work with different sensor setups employing multiple lidars. In addition, we have a lot of experience in using and heavily modifying the code of the Berkeley Localization and Mapping open source project.

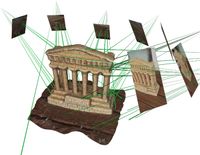

Visual SLAM

When only cameras (or even just a single camera) are available, the algorithms of the previous section cannot be deployed. We have therefore abstracted and deployed the Direct Sparse Odometry and ORB-SLAM open source projects in several projects that required visual SLAM.

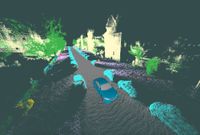

Sensor Simulation

CARLA, the MIT-licensed open source simulator for autonomous driving research, is a valuable tool for autonomous driving and ADAS-related projects. We mainly used it to drive a car on autopilot through the various scenes that the tool provides while generating simulated raw sensor data and extracting ground truth objects and segmentation labels. This gave us access to virtually unlimited, automatically generated training data, which we used to train deep learning models for object detection on real sensor data. Another, smaller use case was a collision avoidance function that prevented a person controlling the virtual car from accidentally or deliberately colliding with other objects. From these projects we have gained a lot of knowledge about how to use this tool in scenarios that can benefit from such an integration.

3D Reconstruction

Our experience ranges from reconstruction with a single monocular camera to the use of multiple cameras in stereo and non-stereo configurations. We have implemented multiple algorithms in this domain and have also designed our own algorithms and improvements to existing ones. Our experience covers the use and integration of a large number of libraries and open source projects in this domain.

Eye Tracking

Our eyes are often considered a window to our brain because the photoreceptor cells that line the retina are directly connected to the brain through the optic nerve. However, because visual acuity (the ability to distinguish fine details) is non-uniform, we move our eyes in order to point the fovea (the area with the highest acuity) at the areas where we want to acquire information. The motion of the eyes can be separated into distinct eye movement types, which can be analysed together with the visual content to provide valuable information about the interesting parts of a scene, the state of the observed person, the difficulty of a task, and more. To better understand these factors, experiments can be moved out of the lab and into more immersive scenarios through the use of wearable eye-tracking devices.
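A simple and widely used way to separate eye movement types is a velocity threshold: samples with a low angular velocity are treated as fixations and samples with a high velocity as saccades. The sketch below illustrates this approach under stated assumptions and is not a description of our own method. The function name is hypothetical, gaze directions are given as angles in degrees, and the threshold of 30 degrees per second is only a placeholder that would need tuning for a specific device.

```python
import math
from typing import List, Sequence


def classify_by_velocity(times: Sequence[float],
                         gaze_x_deg: Sequence[float],
                         gaze_y_deg: Sequence[float],
                         threshold_deg_per_s: float = 30.0) -> List[str]:
    """Label each gaze sample as 'fixation' or 'saccade' by its angular velocity."""
    n = len(times)
    if n < 2:
        return ["fixation"] * n
    labels: List[str] = []
    for i in range(1, n):
        dt = times[i] - times[i - 1]
        # Small-angle approximation of the angular distance between two samples.
        dist = math.hypot(gaze_x_deg[i] - gaze_x_deg[i - 1],
                          gaze_y_deg[i] - gaze_y_deg[i - 1])
        velocity = dist / dt
        labels.append("saccade" if velocity > threshold_deg_per_s else "fixation")
    # The first sample has no predecessor, so it inherits the label of the second.
    return [labels[0]] + labels


t = [0.00, 0.01, 0.02, 0.03, 0.04]
x = [0.00, 0.05, 0.10, 5.00, 5.05]
y = [0.0] * 5
print(classify_by_velocity(t, x, y))
```

Other movement types, such as smooth pursuit, need more elaborate criteria than a single threshold.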